Announcing Alif 1.0: Our First Urdu LLM, Outperforming Other Open-Source LLMs

We are thrilled to announce Alif 1.0, our first-ever Urdu-English LLM, setting a new benchmark in multilingual AI. Specifically optimized for Urdu, Alif addresses critical challenges in Urdu NLP and brings significant advancements in reasoning, fluency, and cultural alignment. This launch marks a significant milestone in making AI more accessible and accurate for 250 million Urdu speakers worldwide.

Why Alif Matters

Developing a high-performing Urdu LLM presents several hurdles:

- Most multilingual LLMs struggle with Urdu, often producing inconsistent or heavily hallucinated responses. They also sometimes insert characters from other scripts into generated Urdu text.

- Lack of High-Quality Datasets: Urdu lacks a reliable instruction-tuning dataset for effective training.

- Translation Limitations: Direct translation is not enough. It often loses fluency and misses cultural context, which is why natively generated Urdu data is needed.

- Reasoning & Safety Challenges: Urdu’s right-to-left script conflicts with left-to-right reasoning tasks, while existing safety frameworks fail to align with regional requirements.

- Culturally-Aware AI is Crucial: There's a critical need for AI models that understand and respect the nuances of low-resource languages.

- Meta-Funded Initiative (LARGE): Our Meta-backed project tackles these challenges head-on, ensuring robust Urdu-language LLM development.
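The foreign-character problem mentioned in the first point above can be checked mechanically. The following is a minimal sketch, not part of the Alif evaluation suite: it flags letters that fall outside the Unicode Arabic-script blocks. The function names are hypothetical, and the check assumes Urdu-only output, so English words in intentionally bilingual text would also be flagged.

```python
import unicodedata

# Unicode blocks that cover Urdu written in the Arabic script.
ARABIC_SCRIPT_RANGES = [
    (0x0600, 0x06FF),  # Arabic
    (0x0750, 0x077F),  # Arabic Supplement
    (0xFB50, 0xFDFF),  # Arabic Presentation Forms-A
    (0xFE70, 0xFEFF),  # Arabic Presentation Forms-B
]


def is_arabic_script(ch: str) -> bool:
    """True if the character's code point lies in one of the blocks above."""
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in ARABIC_SCRIPT_RANGES)


def foreign_letters(text: str) -> list[str]:
    """Return the letters in `text` that are not Arabic-script letters.

    Digits, punctuation, and whitespace are ignored; only characters whose
    Unicode general category starts with "L" (letters) are considered.
    """
    return [
        ch
        for ch in text
        if unicodedata.category(ch).startswith("L") and not is_arabic_script(ch)
    ]


clean = "یہ ایک جملہ ہے۔"
noisy = "یہ ایک 中 جملہ ہے۔"
print(foreign_letters(clean))
print(foreign_letters(noisy))
```

A check like this only catches script-level intrusions; it says nothing about fluency or factual accuracy.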

How Our Approach Solves These Challenges

To overcome these challenges, we have designed the Alif 1.0 8B Instruct model, a powerful Urdu-English model built using multilingual synthetic data distillation:

- First High-Quality Urdu Alpaca Dataset: Alif is trained on a high-quality Urdu Alpaca dataset, generated through multilingual synthetic data techniques and human feedback refinement. The dataset includes:

  - Classification

  - Sentiment Analysis

  - Logical Reasoning with Urdu Chain-of-Thought (CoT)

  - Question Answering (QA)

  - Text Generation

  - Bilingual Translations

  - Ethics & Safety Assessments

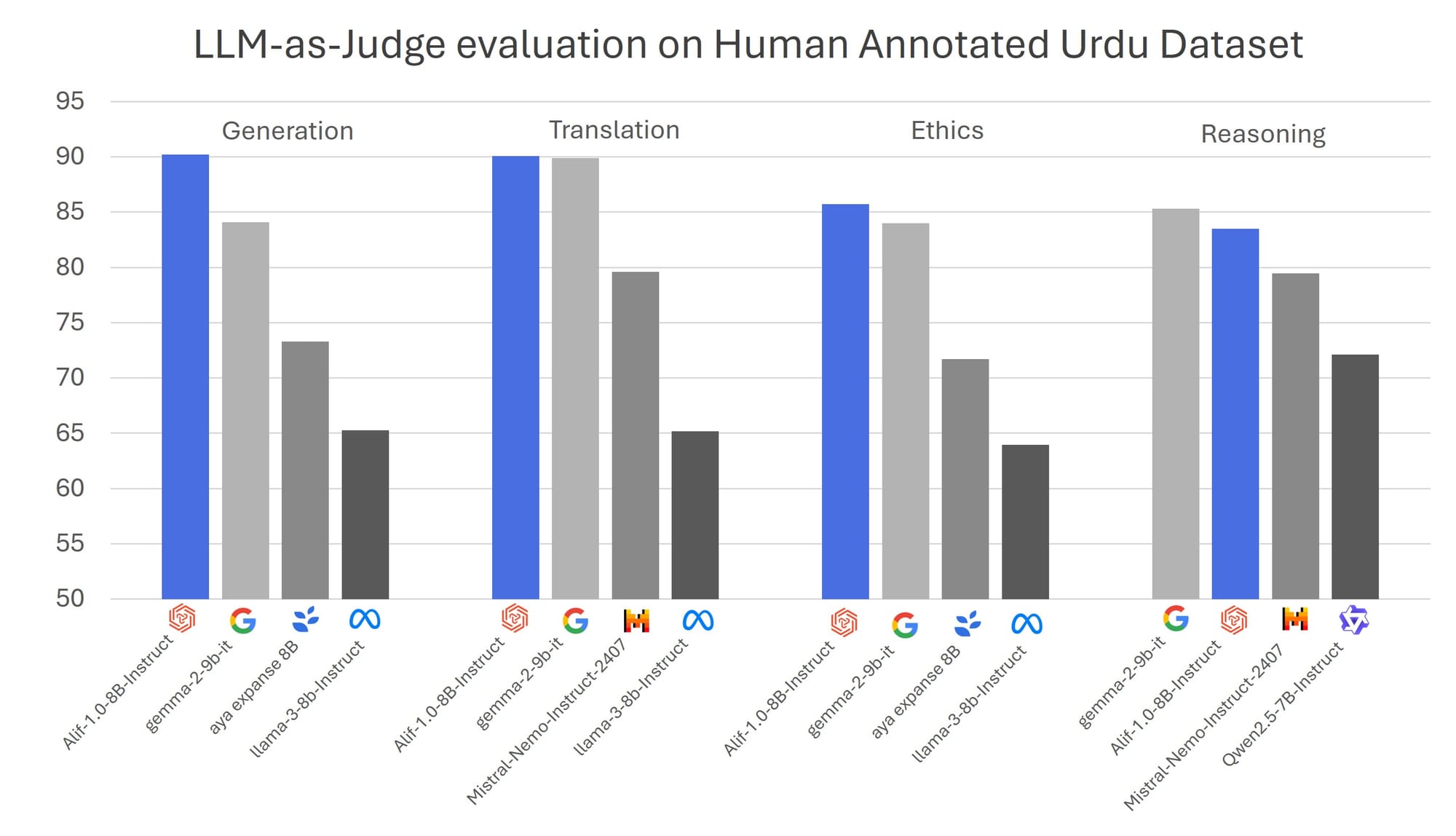
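To make the dataset description more concrete, here is a minimal sketch of what one Alpaca-style record and its training text could look like. The schema of the Urdu Alpaca dataset has not been published here, so the `instruction` / `input` / `output` fields are an assumption borrowed from the original Stanford Alpaca layout. The prompt template is a simplified variant, and the example record is made up for illustration.

```python
# Simplified Alpaca-style templates (assumed, not the confirmed Alif format).
PROMPT_WITH_INPUT = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = "### Instruction:\n{instruction}\n\n### Response:\n"


def format_example(record: dict) -> str:
    """Render one record as a single training string (prompt plus answer)."""
    if record.get("input"):
        prompt = PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    else:
        prompt = PROMPT_NO_INPUT.format(instruction=record["instruction"])
    return prompt + record["output"]


# Illustrative sentiment-analysis record, not taken from the real dataset.
record = {
    "instruction": "اس جملے کے جذبات کی درجہ بندی کریں۔",
    "input": "یہ فلم بہت اچھی تھی۔",
    "output": "مثبت",
}

print(format_example(record))
```

Records without an `input` field fall back to the shorter template, which suits open-ended text generation tasks.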

Additionally, we have developed a human-annotated Urdu evaluation suite, including Urdu red-teaming datasets to assess safety and robustness.

- Enhanced Urdu Reasoning Capabilities: We have integrated Urdu-native CoT prompts and improved logical reasoning tasks to enhance the model’s understanding. This approach also improves contextual comprehension, making sentiment analysis and classification more precise.

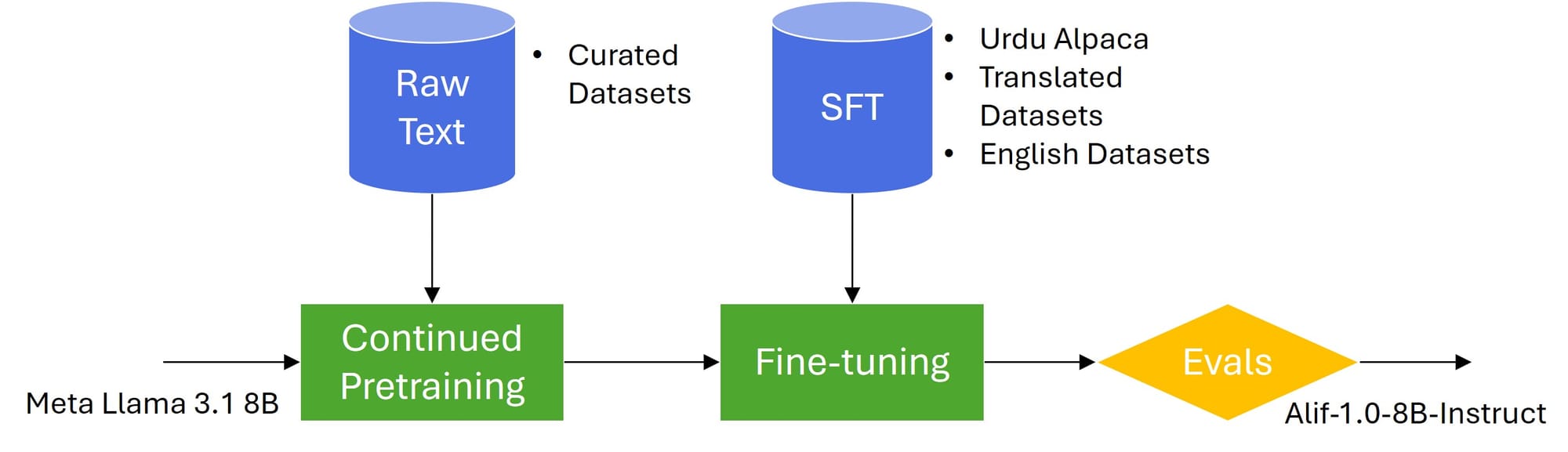

- Optimized Training Pipeline for Efficiency: Our efficient and cost-effective training approach includes:

  - Continued Pretraining: We have leveraged Urdu Wikipedia and other curated data sources to strengthen the model's foundational knowledge of the Urdu language.

  - Fine-Tuning: For fine-tuning, the synthetic dataset is merged with translated Urdu datasets and a small portion of English data for replay, which helps prevent catastrophic forgetting.
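The fine-tuning mix described above can be sketched as follows. This is an illustration under stated assumptions, not the actual Alif pipeline: the function name, the `replay_fraction` parameter, and its default of 0.1 are hypothetical, since the real proportion of English replay data is not given here.

```python
import random


def build_finetune_mix(synthetic, translated, english, replay_fraction=0.1, seed=0):
    """Merge Urdu data with a small English replay sample and shuffle.

    `replay_fraction` is the number of English examples relative to the
    number of Urdu examples (hypothetical value, for illustration only).
    """
    rng = random.Random(seed)
    urdu = list(synthetic) + list(translated)
    english = list(english)

    # Cap the replay sample at the amount of English data available.
    n_replay = min(len(english), round(replay_fraction * len(urdu)))
    replay = rng.sample(english, n_replay)

    mixed = urdu + replay
    rng.shuffle(mixed)  # interleave so English replay is spread across training
    return mixed


# Toy stand-ins for the three data sources.
synthetic = [("ur-synth", i) for i in range(60)]
translated = [("ur-trans", i) for i in range(40)]
english = [("en", i) for i in range(50)]

mix = build_finetune_mix(synthetic, translated, english)
print(len(mix), sum(1 for source, _ in mix if source == "en"))
```

Keeping the English share small leaves most of the training budget for Urdu while still exposing the model to the distribution it was originally trained on.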

Alif-1.0-8B-Instruct

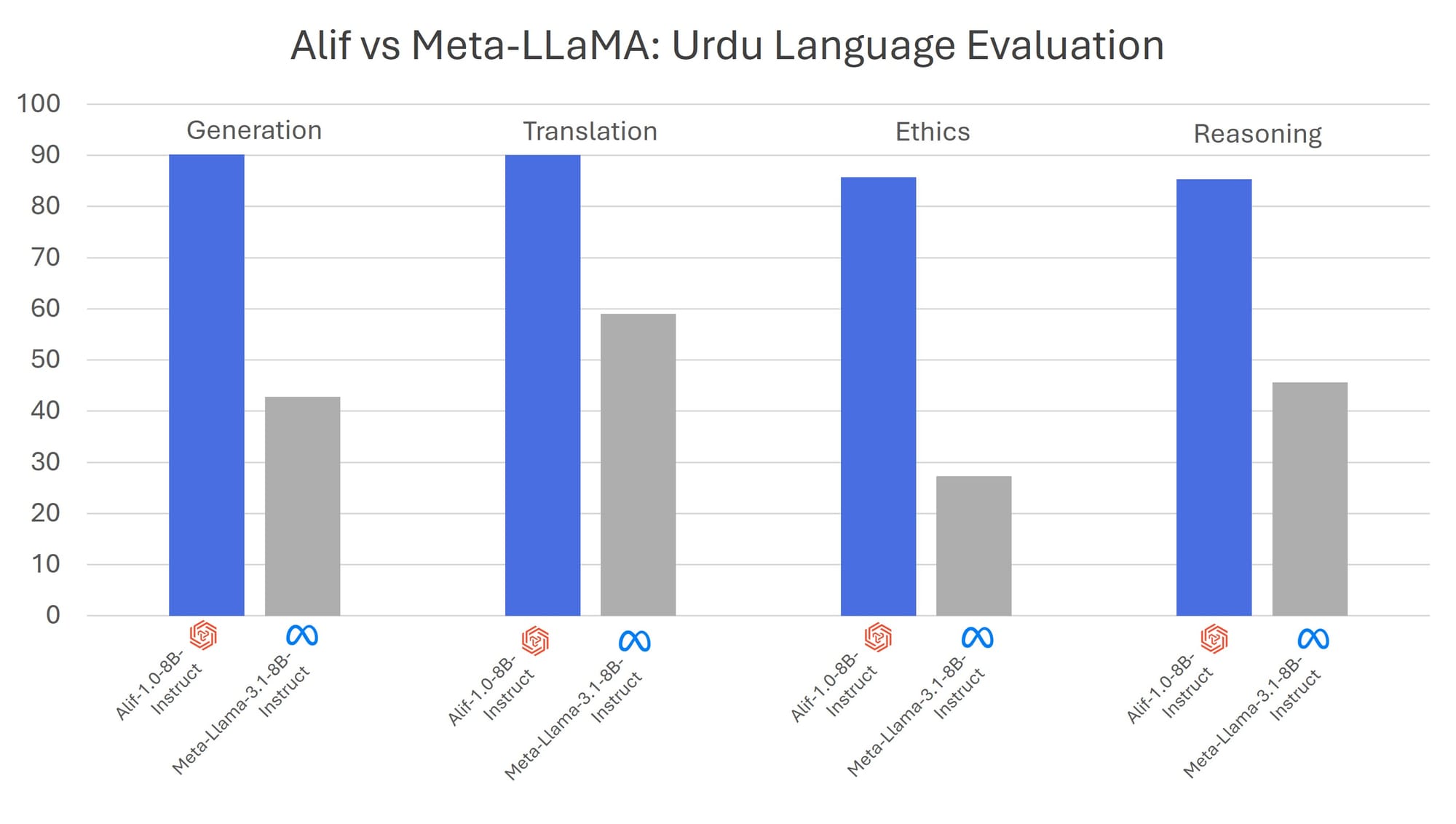

- State-of-the-Art Performance on a Budget: By employing high-quality synthetic data distillation, we have significantly enhanced Meta Llama 3.1 8B’s Urdu capabilities. Alif now outperforms Meta Llama 3.1 8B Instruct on Urdu-specific tasks while maintaining strong English fluency. It also outperforms many available open-source multilingual LLMs, such as Gemma 2 9B, Llama 3.1 8B, Mistral Nemo 12B, Qwen 2.5 7B, and Cohere Aya Expanse 8B, all within a training budget of under $100.

What’s Next?

- Gather more data to enhance the model’s knowledge and understanding.

- Apply model merging and reinforcement learning (RL) techniques to improve bilingual and reasoning capabilities.

- Conduct further evaluations and benchmarking.

Alif is a monumental step forward for Urdu NLP, ensuring cultural and linguistic alignment while expanding bilingual AI capabilities. Stay tuned for more updates as we continue to push the boundaries of AI innovation!

Model Card: https://huggingface.co/large-traversaal/Alif-1.0-8B-Instruct

TextStreamer Colab: https://colab.research.google.com/drive/1mEPynC__uN2tKDvDho3f6MpcKW-GMiAh?usp=sharing

Gradio Colab: https://colab.research.google.com/drive/1DUwlYBOMUd7FZaI631-y6y8fTNiy0pqt?usp=sharing

The model is also available in GGUF format at various quantization levels for Ollama, LM Studio, Jan, and llama.cpp.

Datasets and code will be shared soon.

For errors or additional questions about details in this model card, contact: contact@traversaal.ai